Lesson 18 Parameter Adjustment Method

Deep learning uses a network with multiple layers and adjusts its internal parameters so that the error between the outputs computed from the input values and the corresponding teaching data approaches zero. This approach is called parameter adjustment.

Stochastic Gradient Descent (SGD) is one of the methods for adjusting the parameters of each artificial neuron.

The purpose of deep learning is to find weights and biases that bring the network's output closer to the teaching data. The discussion below focuses on weights, but the same approach applies to biases.

The weights are updated with the following formula:

$$
w \leftarrow w - \eta \frac{\partial E}{\partial w}
$$

Here, $w$ is a weight and $E$ is the error between the output and the teaching data.

$\eta$ is the learning rate. We regard $E$ as a function of $w$ and move $w$ in the negative direction of the gradient. The system iterates this operation, and the value eventually settles where the gradient is 0.
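The update described above can be sketched in a few lines of Python. This is a minimal illustration, not a full training loop. It assumes the usual form of the rule, in which the weight is moved against the gradient of the error, scaled by the learning rate. The single linear neuron `y = w * x`, the squared error `E = 0.5 * (y - t)**2`, the toy samples, and the names `sgd_step` and `train_single_weight` are all assumptions made for this example.

```python
import random


def sgd_step(w, grad, eta):
    """Apply one update: new weight = w - eta * (dE/dw)."""
    return w - eta * grad


def train_single_weight(samples, w=0.0, eta=0.1, epochs=20, seed=0):
    """Fit y = w * x to (x, t) pairs, one sample at a time."""
    rng = random.Random(seed)
    samples = list(samples)
    for _ in range(epochs):
        rng.shuffle(samples)      # "stochastic": visit samples in random order
        for x, t in samples:
            y = w * x             # output of the neuron
            grad = (y - t) * x    # dE/dw for E = 0.5 * (y - t)**2
            w = sgd_step(w, grad, eta)
    return w


# Hypothetical teaching data generated by t = 2 * x
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w_final = train_single_weight(samples)
print(w_final)  # should end up very close to 2.0
```

With these toy values, each update shrinks the distance between the weight and 2.0, so the weight settles near the value that generated the teaching data. A learning rate that is too large would make the updates overshoot instead.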

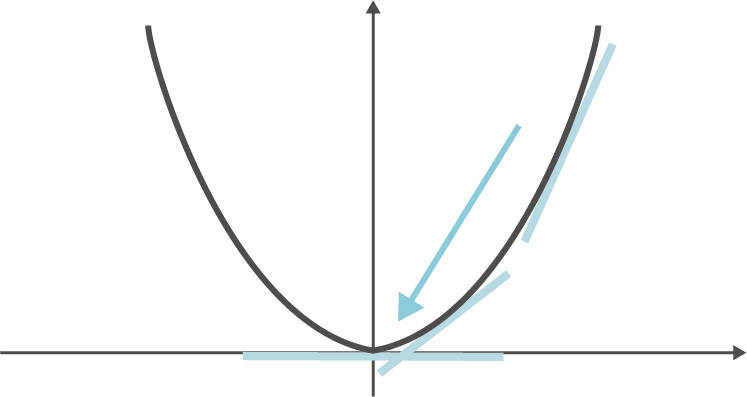

Now consider a quadratic function to see intuitively how this works.

When the current location is far from the point where the gradient is 0, the gradient is large, so the system changes the weight by a larger amount. Conversely, the closer the location is to the target, the smaller the modification. Eventually, the location converges to the target.
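The shrinking step size can be checked numerically. The sketch below assumes a specific quadratic, `E(w) = (w - target)**2`, whose gradient is `2 * (w - target)`. The starting point, target, learning rate, and the function name `descend_quadratic` are illustrative choices, not values from the lesson.

```python
def descend_quadratic(w0=0.0, target=3.0, eta=0.1, steps=50):
    """Run gradient descent on E(w) = (w - target)**2 and record each step."""
    w = w0
    history = []
    for _ in range(steps):
        grad = 2.0 * (w - target)   # dE/dw of the assumed quadratic
        step = -eta * grad          # move against the gradient
        history.append((w, step))
        w = w + step
    return w, history


w_end, history = descend_quadratic()
for w, step in history[:5]:
    print(f"w = {w:.4f}, step = {step:+.4f}")
print(f"final w = {w_end:.4f}")
```

With these settings, every step is 0.8 times the size of the previous one, because the step is proportional to the remaining distance to the target. The first steps are the largest and the last ones are tiny.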

Various types of activation functions have been proposed. It is very important to select a differentiable one so that the gradients needed to adjust the parameters can be computed.
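To illustrate why differentiability matters, the sketch below compares the sigmoid function with a hard step function. Both are chosen here as examples and are not prescribed by the lesson. The sigmoid has a usable derivative, while the step function gives a zero gradient away from the jump, so a gradient-based update would not move the parameters. The helper `numerical_grad` is a hypothetical central-difference check.

```python
import math


def sigmoid(x):
    """Smooth, differentiable activation."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Analytic derivative: sigmoid(x) * (1 - sigmoid(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def step(x):
    """Hard threshold: flat everywhere except the jump at 0."""
    return 1.0 if x >= 0 else 0.0


def numerical_grad(f, x, h=1e-6):
    """Central-difference estimate of df/dx."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


x = 0.5
print(sigmoid_grad(x), numerical_grad(sigmoid, x))  # the two values should agree closely
print(numerical_grad(step, x))                      # zero gradient away from the jump
```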